There has been a lot of discussion recently about the future of GNOME and the stasis that the project seems to have reached ((I dislike the term “decadence,” because it seems to imply abundant wealth going to waste. GNOME will never be the wealthy-person’s OS of choice. That would be OS X)). I think that stasis is not a horrible place to be. As others have said, having a desktop that is stable, useful, and predictable is a good thing. Developers have worked hard to get the desktop where it is, so let’s give them all a pat on the back before we discount all that’s been achieved.

But, as an application developer, I see some shortcomings to GNOME that is preventing further progress in user interface design. There are technical limitations, but there are also social limitations working against progress. On the technical side, GTK needs some changes to allow more developers to take user interaction to the next level. On the community side, there needs to be an official GNOME-sponsored forum in which to experiment free from criticism.

To enable developers to try new things, GTK may need to break ABI stability and move to version 3. I call it “3 for 3.0” — the three features GTK needs to move forward:

- Multi-input from the ground up: Right now GTK reacts to one event at a time. It’s possible to make an application look like the user is doing many things at once, but true multi-touch and multi-user interaction is not really possible — or at least it’s too difficult for moderately-skilled developers (like me) to achieve.

- First-class Animation: GTK needs to perform animation by default. There are ways to make widgets spin, slide, and fade, but they are all hacks. I should be able to fire off an animation and perform other functions while it is animating. I should get a signal when the animation is complete. Built-in state management would be a key feature. There should be a standard library of basic transitions and special effects that anyone can use with minimal code (like fade, push, wipe).

- 3D-awareness: GTK doesn’t need to be 3D itself, but it should understand and be ready for 3D. 2D apps will never disappear, but there will be a need for a bridge between 2D and 3D. This could mean that GTK would support a z-buffer, or perhaps it would have a blessed 3d-canvas like Clutter. Or perhaps it could have access to OpenGL to provide various compositing and shader effects.

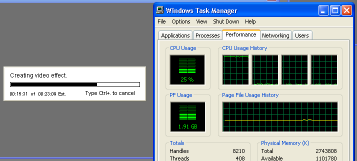

Perhaps some of these effects seem only useful for pointless flourishes that will slow down interactivity and increase processor overhead. I would argue that what GNOME needs right now is some stupid slow-ass eye-candy. Look at Compiz Fusion. It has dozens of gaudy effects, half of which are useless and most of which have way too many settings. But I love playing with it. It’s been a fertile sandbox for developers to go in and see what works. Maybe the “fire” transition is a waste of time, but there are a few Compiz features that are genuinely useful and I use all the time. The “enhanced zoom” feature, for instance, is a perfect way to blow up a youtube video without making it full-screen.

Every now and then I see a screencast from a GNOME developer working on a little pet project, and some of those demos have been amazing. Whenever I go to the GNOME Summit in Boston, there’s always some guy with his laptop, and he says, “take a look at this –” and proceeds to blow everyone away with some awesome thing he’s been working on. It’s rare, though, for those hacks to escape from that single laptop onto anyone else’s.

GNOME needs a Project Sandbox — an official, gory-edge (it’s past “bleeding”), parallel installable set of libraries and programs (“Toys,” perhaps? ((The exact terminology isn’t important, I’m just keeping the Sandbox metaphor going))) with all of the crazy hacks developers have been trying. It should be housed in a distributed SCM, so developers can push and pull from each other, mashing features and screwing around with GTK “3”, Clutter, Pyro, and whatever other toys people come up with.

The Sandbox should have two rules:

- No Kicking Sand ((alternate title: “No Pooping in the Sandbox”)) — ie, no stop energy. Anything goes, no matter how hacky. Developers should be able to prototype ideas quickly, no matter how cracked up they may be. The good ideas will stick and can be re-written cleanly. Nobody, not Apple, not Microsoft, not Nokia, knows what will really be useful in the future with multi-tap and 3D. Apple has a head start, but that doesn’t mean they have all the answers.

- Anyone Can Play: If I pull from your tree, I should be able to build and install what you’ve made. It does no good to have a toy if it only works in your corner of the sandbox. There might be some cases where a feature requires a certain video card, but developers should make a good faith effort to make code build and install on systems other than their own. Setting up a development environment is Hard, but I wouldn’t care if I needed 4 hacked copies of GTK each with different .so-names. Disk space is cheap! Computers are fast!

GNOME has done a good job of reining in developer craziness and promoting consistency and uniformity across the desktop. That was good, and was necessary while the desktop was maturing. Now it’s mature, and those reins need to be lifted, or at least relaxed. The stable desktop can plod forward steadily, but developers need a place to relax, rip off every feature from the iphone and Vista, and more importantly make that code public without fear of attack. What’s worse than a flame on Planet Gnome in response to a crazy feature? The feature that doesn’t get written for fear of being flamed.